I was trying to do this last night and just don't know wtf to do. I found this repo that converts the IDX file format to a numpy array and saves the resultant image;

https://github.com/sadimanna/idx2numpy_array (I had to change 'imsave' to imageio.imwrite, and change some directories to fit my path)

So I've ran all that, and now have this directory full of these images. (When it was running, it'd say. `Lossy conversion from int64 to uint8. Range [0, 255]. Convert image to uint8 prior to saving to suppress this warning.` I assume this is doing the 'divide by 255` for me, in the preprocessing step, automatically?)

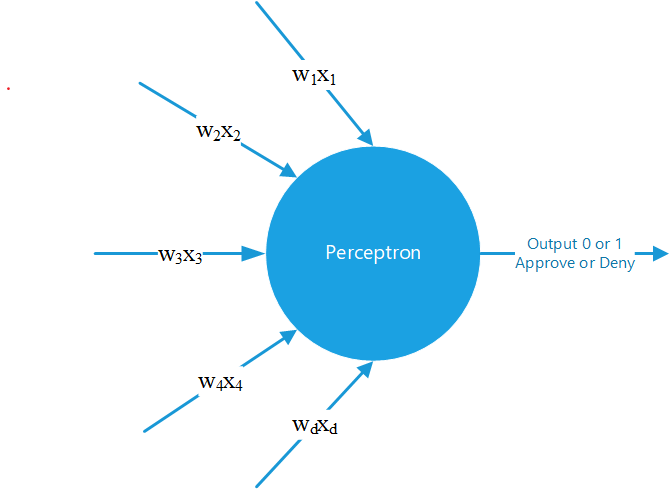

Anyway, so I have this data, and I have a Perceptron, here:

https://dpaste.com/2YPYRHNVG, also (basically the same code): https://dpaste.com/BC2DCGQLM

So I should have a numpy array, training and test data, and a Perceptron, so I should be good to go. Thanks for the help. Just kidding, I really don't know how to proceed from here. I believe I've set the weights and bias node properly (see updated dpaste below). Basically, I don't know how to interact with my Perceptron to proceed here, I don't know how to identify or load the targets, the data or how to run it.

Current Perceptron: http://dpaste.com/7KTT4B5L4

Previously I was doing stuff like what can be seen here, very basic and just copied from my book: http://dpaste.com/AXU8LYGHR

Would really appreciate someone holding my hand through some of this until I get rolling

I'm doing one of the assigments from Duke University Course - Introduction To Deep Learning

I'm trying to create a classifier with MNIST, but I dont want to use the Class implementation with nn.module, this is my code:

import numpy as np

import matplotlib.pyplot as plt

import torch

from tqdm.notebook import tqdm

from torchvision import datasets, transforms

import torch.nn.functional as F

mnist_train = datasets.MNIST(root="./datasets", train=True, transform=transforms.ToTensor(), download=True)

mnist_test = datasets.MNIST(root="./datasets", train=False, transform=transforms.ToTensor(), download=True)

train_loader = torch.utils.data.DataLoader(mnist_train, batch_size=100, shuffle=True)

test_loader = torch.utils.data.DataLoader(mnist_test, batch_size=100, shuffle=False)

data_train_iter = iter(train_loader)

images, labels = data_train_iter.next()

# Randomly initialize weights W

W1 = torch.randn(784, 500)/np.sqrt(784)

W1.requires_grad_()

# Initialize bias b as 0s

b1 = torch.zeros(500, requires_grad=True)

# Randomly initialize weights W

W2 = torch.randn(500, 10)

W2.requires_grad_()

# Initialize bias b as 0s

b2 = torch.zeros(10, requires_grad=True)

# Optimizer

optimizer = torch.optim.SGD([W2,b2], lr=0.1)

# Iterate through train set minibatchs

for images, labels in tqdm(train_loader):

# Zero out the gradients

optimizer.zero_grad()

# Forward pass

x = images.view(-1, 28*28)

# Linear transformation with W and b

z1 = torch.matmul(x, W1) + b1

A1 = F.relu(z1)

# Linear transformation with W and b

y = torch.matmul(A1, W2) + b2

cross_entropy = F.cross_entropy(y, labels)

# Backward pass

cross_entropy.backward()

optimizer.step()

correct = 0

total = len(mnist_test)

with torch.no_grad():

# Iterate through train set minibatchs

for images, labels in tqdm(train_loader):

# Forward pass

x = images.view(-1, 28*28)

# Linear transformation with W and b

z1 = torch.matmul(x, W1) + b1

A1 = F.relu(z1)

# Linear transformation with W and b

y = torch.matmul(A1, W2) + b2

predictions = torch.argmax(y, dim=1)

correct += torch.sum((predictions == labels).float())

print('Test accuracy: {}'.format(correct/total))

I know I'm just training the out layer parameters, the question is: How Can I Tra

... keep reading on reddit ➡https://github.com/Achilles446/NeuralNetwork-and-Perceptron-for-Processing

This project contains two classes, the neural network (NN) class, and perceptron (Perceptron) class. It's super easy to implement, and I included an example for flappy bird using the Perceptron class. Documentation is in the README file.

I had a really hard time finding a repository for neural networks in Processing, so I hope this helps anyone trying to do a project of their own. Feel free to message me if you have any questions about use. If you use this on a project, I'd appreciate linking the GitHub link somewhere. It's not necessary but it goes a long way.

Enjoy!

TL;DR: Logistic regression and perceptrons seem really, really similar at first glance. But the more I think about them mathematically, the former seems all about projection onto the linear decision boundary, whereas the latter seem more about location relative to the boundary. This seems like a fundamental difference. Either one conception is wrong, or else there’s some equivalence between these conceptions that I’m not seeing.

Please forgive me while I flounder to put my thoughts into words. I want to understand the maths underlying neural networks, and to do so, I'm starting by trying to understand the relationships between the algorithms that came before. But I've hit a wall when trying to see similarities between logistic regression and perceptrons. I'll try to walk you through my thinking.

First logistic regression (LR). Studying the formula, LR essentially seems simply like a linear regression passed through a nonlinear sigmoid activation function. The activation function's purpose is to squash the linear regression's range between 0 and 1. This asymptotically bounded range allows us to interpret the LR's output as a probability, namely the probability that the input belongs to the positive class. Because we're just transforming a straight line to a squiggly one, I believe this can be interpreted as projecting the input feature vector onto the linear regression line (just like in linear regression), then compressing the range. Therefore, in LR, the output is a direct reflection of where the inputs fall on the line.

Second, perceptrons. Zooming out, perceptrons seem pretty much mathematically identical to LR, with one change: the activation function is not a sigmoid, but rather a step function. This means that whereas a LR's output will be a real value ranging continuously between 0 and 1, a perceptron's output is only ever 0 or 1 (or equivalently, -1 or 1), signifying whether the input is (1) or isn't (0, -1) a member of the positive class. Thus, the output of LR and perceptrons are analogous, except that because LR produces a continuous output, it also encodes the "confidence" of its classification decision, whereas perceptrons simply give you the decision.

Please stop me here if anything so far is incorr

... keep reading on reddit ➡

#CenterPrime #Aniverse #Blockchain #FinTech #Oracle #DeFi #NFT

Many companies are jumping into the development of technology to form the NFT market since the NFT is becoming known to the general public beyond the cryptocurrency industry. In an environment where NFT tokenization of various content such as video, music, and art is continuously being happening, CenterPrime has partnered with Aniverse to build and develop Aniverse NFT software.

“ DeFi Dashboard for the Enterprise”

As the DeFi economy starts to stand out, the traditional financial bank sector is introducing the fiduciarization of the bitcoin and ethereum. For example, on 11 February 2021, the New York Mellon bank(BNY Mellon), the United States global custody bank decided to handle digital virtual assets such as bitcoin and ethereum. Following the visa card, the master card also decided to include some part of cryptocurrencies in the payment system on 11 January 2021, and the financial authorities of Canada approved the bitcoin exchange-traded fund (ETF) of the Purpose Investment, the asset management corporation for the first time.

#CenterPrime #Blockchain #FinTech #DeFi #Oracle #Operator

Read more ▶ Operator. “ DeFi Dashboard for the Enterprise” | by CenterPrime | CenterPrime | Feb 2021 | Medium

“New Oracle DeFi Wallet”

In 2021, change in the blockchain market is occurring globally, and countries, institutions, and enterprises to try to focus on and utilizing this change are increasing. Especially, on blockchain technology and investment. As of February 17, 2021, from CBDC, the digital currency issued by the country to the rise of Bitcoin, which exceeded $50,000 and the investment of numerous celebrities supporting it, currency digitalization is in earnest.

#CenterPrime #Blockchain #Defi #Oracle #FinTech #Wallet

Read more ▶ Oracle Perceptron Wallet. “New Oracle DeFi Wallet” | by CenterPrime | CenterPrime | Feb 2021 | Medium

I have been trying to connect math concepts with neural network mechanics. For example: Why do we even linearly tranform input vector by weights? In RNN, why transform hidden vector as well as input vector and add them?

Wh^t-1 + Wx^t

But things are quite confusing for me and of very little resource that explains this.

Further explaining my question, suppose we have a perceptron that models AND operator. We have one input and output layer. We have 2 input with 4 combination. We have 2 weight. I think we can model this problem as system of linear equation as following which we can use perceptron to solve.

1x + 1y = 1

0x + 1y = 0

1x + 0y = 0

0x + 0y = 0

where x and y are weights in terms of perceptron and a solution/variable we need to find out in terms of system of equation

- Am I going in the right direction? Is my assumption correct?

- Can this assumption can be applied to all the neuron in deep structured neural network?

“Preparing for Network Hacking by Beefing Up Security of DeFi Services.”

Even without a third-party agency and legal system to guarantee the funds, many people have started to participate in DeFi services powered by blockchain networks. The reason for DeFi’s growth is the advantages of decentralization, efficiency, and the fact that anyone can participate while also fixing the disadvantages of the complex traditional finance system. However, this has led to continuous problems of hacking because of the openness and weak security of DeFi.

#CenterPrime #Blockchain #FinTech #DeFi #Oracle #Explorer

Hello i want to create a neural network 4-2-1 topology, 4 inputs, the hidden layer consists of 2 neurons and one output. The activation functions are step functions. I already know the weights and the step function thresholds. How do i get started and get to know what commands should i be using? I already checked the matlab's help page.

TL;DR: Logistic regression and perceptrons seem really, really similar at first glance. But the more I think about them mathematically, the former seems all about projection onto the linear decision boundary, whereas the latter seem more about location relative to the boundary. This seems like a fundamental difference. Either one conception is wrong, or else there’s some equivalence between these conceptions that I’m not seeing.

Please forgive me while I flounder to put my thoughts into words. I want to understand the maths underlying neural networks, and to do so, I'm starting by trying to understand the relationships between the algorithms that came before. But I've hit a wall when trying to see similarities between logistic regression and perceptrons. I'll try to walk you through my thinking.

First logistic regression (LR). Studying the formula, LR essentially seems simply like a linear regression passed through a nonlinear sigmoid activation function. The activation function's purpose is to squash the linear regression's range between 0 and 1. This asymptotically bounded range allows us to interpret the LR's output as a probability, namely the probability that the input belongs to the positive class. Because we're just transforming a straight line to a squiggly one, I believe this can be interpreted as projecting the input feature vector onto the linear regression line (just like in linear regression), then compressing the range. Therefore, in LR, the output is a direct reflection of where the inputs fall on the line.

Second, perceptrons. Zooming out, perceptrons seem pretty much mathematically identical to LR, with one change: the activation function is not a sigmoid, but rather a step function. This means that whereas a LR's output will be a real value ranging continuously between 0 and 1, a perceptron's output is only ever 0 or 1 (or equivalently, -1 or 1), signifying whether the input is (1) or isn't (0, -1) a member of the positive class. Thus, the output of LR and perceptrons are analogous, except that because LR produces a continuous output, it also encodes the "confidence" of its classification decision, whereas perceptrons simply give you the decision.

Please stop me here if anything so far is incorr

... keep reading on reddit ➡TL;DR: Logistic regression and perceptrons seem really, really similar at first glance. But the more I think about them mathematically, the former seems all about projection onto the linear decision boundary, whereas the latter seem more about location relative to the boundary. This seems like a fundamental difference. Either one conception is wrong, or else there’s some equivalence between these conceptions that I’m not seeing.

Please forgive me while I flounder to put my thoughts into words. I want to understand the maths underlying neural networks, and to do so, I'm starting by trying to understand the relationships between the algorithms that came before. But I've hit a wall when trying to see similarities between logistic regression and perceptrons. I'll try to walk you through my thinking.

First logistic regression (LR). Studying the formula, LR essentially seems simply like a linear regression passed through a nonlinear sigmoid activation function. The activation function's purpose is to squash the linear regression's range between 0 and 1. This asymptotically bounded range allows us to interpret the LR's output as a probability, namely the probability that the input belongs to the positive class. Because we're just transforming a straight line to a squiggly one, I believe this can be interpreted as projecting the input feature vector onto the linear regression line (just like in linear regression), then compressing the range. Therefore, in LR, the output is a direct reflection of where the inputs fall on the line.

Second, perceptrons. Zooming out, perceptrons seem pretty much mathematically identical to LR, with one change: the activation function is not a sigmoid, but rather a step function. This means that whereas a LR's output will be a real value ranging continuously between 0 and 1, a perceptron's output is only ever 0 or 1 (or equivalently, -1 or 1), signifying whether the input is (1) or isn't (0, -1) a member of the positive class. Thus, the output of LR and perceptrons are analogous, except that because LR produces a continuous output, it also encodes the "confidence" of its classification decision, whereas perceptrons simply give you the decision.

Please stop me here if anything so far is incorr

... keep reading on reddit ➡