A list of puns related to "Inference Engine"

Traditionally, AI models have been run on powerful cloud servers. On-device machine learning, such as running models directly on mobile phones, has been comparatively rare. This is mainly because phones have limited storage, memory, compute, and power compared with what AI models typically require. Despite these limitations, on-device AI can be quite helpful in some scenarios.

To support on-device models, Microsoft recently released ONNX Runtime version 1.10, which supports building C# applications with Xamarin, an open-source platform for building applications with C# and .NET. This should help developers deploy AI models on Android and iOS. The release also enables building cross-platform applications with Xamarin.Forms. Microsoft has added an example Xamarin application that runs a ResNet classifier on Android and iOS using ONNX Runtime's NuGet package. For detailed steps on adding the ONNX Runtime package and on building Xamarin.Forms applications, one can take a look here.

Hello, I have a question about quantization. I'm trying to write an inference engine by hand. I've already written a working float32 version. It's an image-processing network, so the size of the intermediate layers is mostly affected by the input resolution. I wanted to try int8 instead of float32 to see how well it works. In PyTorch, when I convert to a quantized int model, only the weights are converted, not the bias. Intuitively, I think the weights, the bias, _and_ the input data would all need to be int8 to run the network with less memory. Is this intuition right? If so, how should I convert the bias to int8?
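One common answer, assuming a scheme like the TensorFlow Lite int8 quantization spec rather than anything PyTorch-specific: inputs and weights are int8, but the bias is stored as int32 with scale s_x · s_w and zero point 0, because it is added directly into the int32 accumulator that holds the int8 products. The bias costs little memory either way (one value per output channel), while the activations, which dominate memory for an image network, stay int8. The pure-Python sketch below shows a single dense layer with symmetric per-tensor scales; the function names are hypothetical.

```python
def symmetric_scale(values, qmax=127):
    """Per-tensor scale so that the largest magnitude maps to qmax."""
    largest = max(abs(v) for v in values)
    return largest / qmax if largest > 0 else 1.0


def quantize(values, scale, qmin, qmax):
    """q = clamp(round(v / scale), qmin, qmax)."""
    return [max(qmin, min(qmax, round(v / scale))) for v in values]


def int8_dense(x, weights, bias):
    """Compute y = W x + b with int8 inputs/weights and an int32 bias and accumulator."""
    s_x = symmetric_scale(x)
    s_w = symmetric_scale([w for row in weights for w in row])
    q_x = quantize(x, s_x, -127, 127)
    q_w = [quantize(row, s_w, -127, 127) for row in weights]

    # The products q_w * q_x carry the scale s_x * s_w, so the bias is quantized
    # with that same scale into int32 and added straight into the accumulator.
    s_acc = s_x * s_w
    q_b = quantize(bias, s_acc, -2**31, 2**31 - 1)

    outputs = []
    for row, qb in zip(q_w, q_b):
        acc = qb + sum(qw * qx for qw, qx in zip(row, q_x))  # would be int32 in C/C++
        # Dequantize here; a full int8 pipeline would instead requantize acc
        # to int8 using the next layer's input scale.
        outputs.append(acc * s_acc)
    return outputs


x = [0.5, -1.0, 0.25]
W = [[0.2, -0.4, 0.1], [0.3, 0.3, -0.6]]
b = [0.05, -0.1]
print(int8_dense(x, W, b))  # close to the float result [0.575, -0.4]
```

The bias needs the wider type because s_x · s_w is tiny, so even a modest float bias maps to a large integer; squeezing it into int8 would clip it.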

I am trying to better understand the concept of inference in this space, and what an inference engine's capabilities and responsibilities are and are not.

I have a simple schema. A pseudo-representation is:

CLASS: MyClass

PROPERTY: name

PROPERTY: item

This can be expressed with the following RDF triples (in Turtle):

@prefix ex: <http://example.org/> .
@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .
@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .
@prefix sch: <http://schema.org/> .

ex:MyClass a rdfs:Class .

ex:item a rdf:Property ;
    sch:domainIncludes ex:MyClass ;
    sch:rangeIncludes sch:Text .

ex:name a rdf:Property ;
    sch:domainIncludes ex:MyClass ;
    sch:rangeIncludes sch:Text .

Assume that ex:item will be a list of one or more strings. Assume that ex:name will be unique and singular.

Let's say that I create the following data instance based on this schema and insert it into a DB.

@prefix ex: <http://example.org/> .

ex:77f034d a ex:MyClass ;
    ex:item "item 1",
        "item 2",
        "item 3" ;
    ex:name "GroupOfItems..A" .

And then later, I insert this data into the same database:

@prefix ex: <http://example.org/> .

ex:f03358e a ex:MyClass ;
    ex:item "item 4" ;
    ex:name "GroupOfItems..A" .

What I obviously intend is that a query for the items related to GroupOfItems..A returns item 1, item 2, item 3, and item 4.

As I understand it, an inference engine will create facts (a new triple) based on the known facts.

In this specific case, the triple(s) that should be created are:

ex:77f034d ex:item "item 4" .

or

ex:f03358e ex:item "item 1" .
ex:f03358e ex:item "item 2" .
ex:f03358e ex:item "item 3" .

Is this what an inference engine does?

Can anyone explain in more detail how it would do this?

Is there something missing from the schema that would allow these inferences to be made?

Is this kind of inference rule something I would implement myself?

All thoughts and comments are appreciated.
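A minimal sketch of what forward chaining looks like for this case, assuming the rule is written by hand: if two ex:MyClass resources share an ex:name value, each one gets the other's ex:item values. Triples are plain Python tuples of prefixed names (with rdf:type spelled out in place of Turtle's `a`), and the rule and function name are hypothetical. Nothing in the RDFS/schema.org vocabulary above entails this rule, so an RDFS reasoner alone would not derive these triples; the rule is roughly what declaring ex:name as a key (for example with OWL's owl:hasKey) is meant to capture.

```python
from collections import defaultdict


def infer_shared_items(triples):
    """Forward-chain a hypothetical rule to a fixpoint:
    if ?a and ?b are both ex:MyClass and share an ex:name value,
    then every ex:item of one is also an ex:item of the other."""
    facts = set(triples)
    while True:
        # Group MyClass instances by their ex:name value.
        by_name = defaultdict(set)
        for s, p, o in facts:
            if p == "ex:name" and (s, "rdf:type", "ex:MyClass") in facts:
                by_name[o].add(s)
        new_facts = set()
        for subjects in by_name.values():
            items = {o for s, p, o in facts if s in subjects and p == "ex:item"}
            for s in subjects:
                for item in items:
                    new_facts.add((s, "ex:item", item))
        new_facts -= facts  # keep only genuinely new triples
        if not new_facts:
            return facts
        facts |= new_facts


data = {
    ("ex:77f034d", "rdf:type", "ex:MyClass"),
    ("ex:77f034d", "ex:item", "item 1"),
    ("ex:77f034d", "ex:item", "item 2"),
    ("ex:77f034d", "ex:item", "item 3"),
    ("ex:77f034d", "ex:name", "GroupOfItems..A"),
    ("ex:f03358e", "rdf:type", "ex:MyClass"),
    ("ex:f03358e", "ex:item", "item 4"),
    ("ex:f03358e", "ex:name", "GroupOfItems..A"),
}
inferred = infer_shared_items(data) - data
for triple in sorted(inferred):
    print(triple)
```

Because the rule is symmetric, it derives both sets of triples from the question at once.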

Hi everyone! Super excited to open-source Neuropod today!

It's an abstraction layer on top of existing Deep Learning frameworks (such as TensorFlow and PyTorch) that powers hundreds of models across Uber ATG, Uber AI, and the core Uber business.

I lead the development of Neuropod and am happy to answer any questions :)

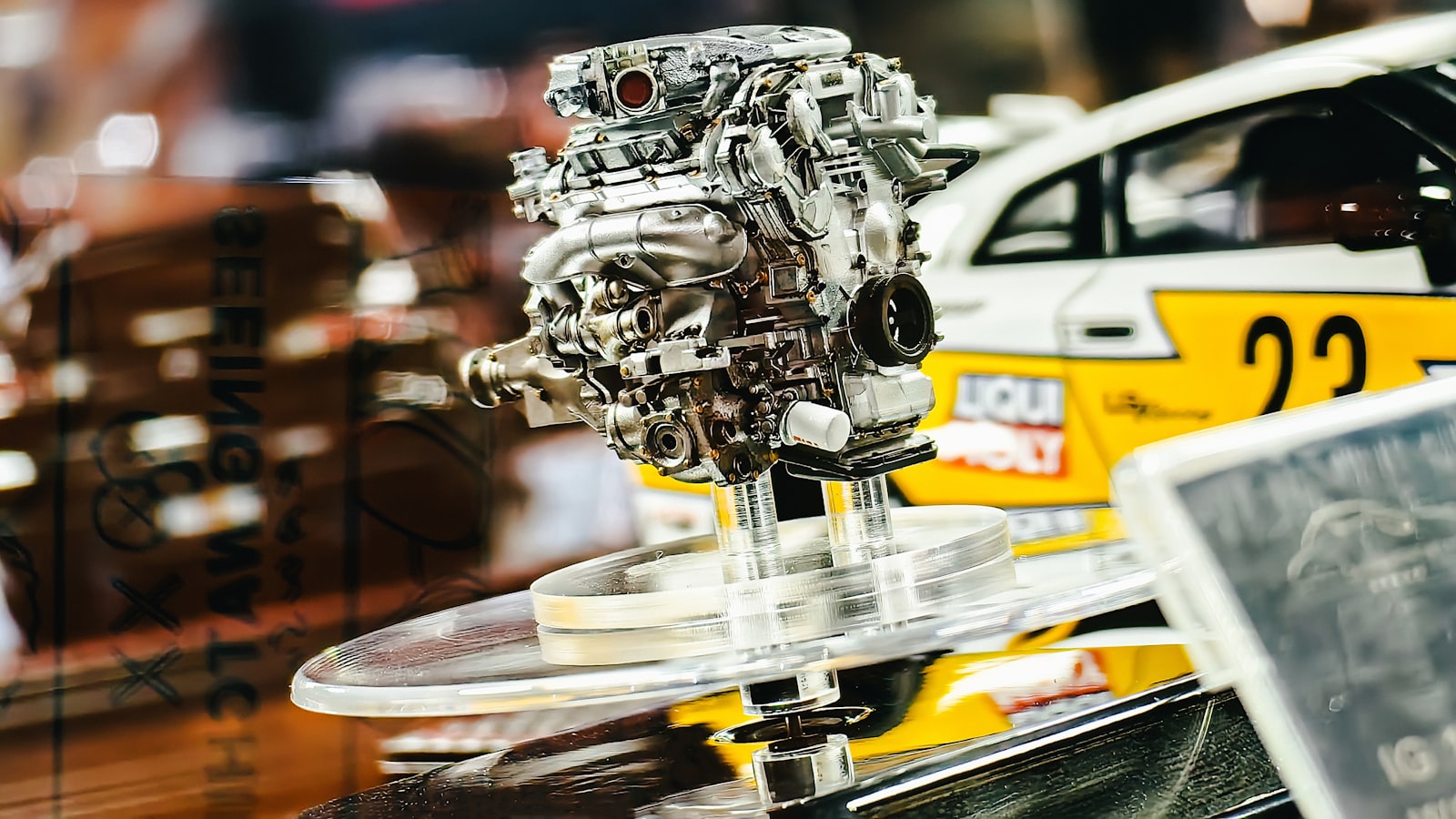

I've been wondering whether my 1994 3S-GE 2.0L 16v engine is an interference or non-interference engine, and I can't seem to find a reliable source on the internet. Does anyone know?

https://i.imgur.com/AEnLxK3.png

This can be thought of as a BERT-based search engine for computer science research papers.

https://thumbs.gfycat.com/DependableGorgeousEquestrian-mobile.mp4

https://github.com/Santosh-Gupta/NaturalLanguageRecommendations

Brief summary: We used the Semantic Scholar Corpus and filtered it for CS papers. The corpus includes the papers' citation network, so we trained word2vec on that network. We then used these citation embeddings as the training targets for BERT's output, with each paper's abstract as the input.
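Because the label here is a vector (the paper's word2vec citation embedding) rather than a class, training amounts to regressing BERT's output onto that vector. The sketch below assumes a cosine-distance loss; the post does not say which loss was actually used, and the function names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cosine_loss(predicted, target):
    """Hypothetical per-example loss: 0 when BERT's (projected) output points the
    same way as the citation embedding, 1 when orthogonal, 2 when opposite."""
    return 1.0 - cosine_similarity(predicted, target)


def batch_loss(predictions, targets):
    """Mean loss over a batch of (predicted vector, citation embedding) pairs."""
    return sum(cosine_loss(p, t) for p, t in zip(predictions, targets)) / len(targets)


print(batch_loss([[1.0, 0.0], [1.0, 0.0]], [[2.0, 0.0], [0.0, 1.0]]))
```

A direction-only loss like this ignores vector length, which suits embeddings that are later compared by cosine similarity at search time.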

Here is an inference Colab notebook:

https://colab.research.google.com/github/Santosh-Gupta/NaturalLanguageRecommendations/blob/master/notebooks/inference/DemoNaturalLanguageRecommendationsCPU_Autofeedback.ipynb#scrollTo=wc3PMILi2LN6

It automatically and anonymously records queries, which we'll use to test future versions of our model. If you don't want to provide feedback automatically, here is a version where feedback can only be sent manually:

https://colab.research.google.com/github/Santosh-Gupta/NaturalLanguageRecommendations/blob/master/notebooks/inference/DemoNaturalLanguageRecommendationsCPU_Manualfeedback.ipynb

We are in the middle of developing much-improved versions of our model: more accurate models that contain more papers (we accidentally filtered out a number of important CS papers in the first version). However, we had to submit our initial project to a TensorFlow hackathon, so we decided to do an initial pre-release and use the opportunity to collect some user data for further qualitative analysis of our models. Here is our hackathon submission:

https://devpost.com/software/naturallanguagerecommendations

As a side quest, we also built a TPU-based vector similarity search library. We will eventually be dealing with a nine-figure number of paper embeddings of size 512 or 256. TPUs have a lot of memory and are very fast, so they may help when searching over that many vectors.
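At its core, a vector similarity search library answers "which stored embeddings are closest to this query?". Below is a brute-force reference in pure Python, assuming cosine similarity as the metric; it is not the tpu_index API, and the function names and paper IDs are hypothetical. A TPU version would presumably perform the same computation as one large matrix multiply over the normalized embedding matrix followed by a top-k, which is what makes accelerators attractive at this scale.

```python
import heapq
import math


def normalize(vector):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def build_index(embeddings):
    """Pre-normalize every stored embedding once (keys are paper IDs)."""
    return {key: normalize(vec) for key, vec in embeddings.items()}


def top_k(index, query, k):
    """Return the k (score, key) pairs with the highest cosine similarity to query."""
    q = normalize(query)
    scores = ((sum(a * b for a, b in zip(q, vec)), key) for key, vec in index.items())
    return heapq.nlargest(k, scores)


index = build_index({"paper-a": [1.0, 0.0], "paper-b": [0.0, 1.0], "paper-c": [1.0, 1.0]})
print([key for _, key in top_k(index, [1.0, 0.1], k=2)])  # ['paper-a', 'paper-c']
```

Normalizing at build time means each query costs one dot product per stored vector, which is exactly the work that batches well on matrix hardware.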

https://i.imgur.com/1LVlz34.png

https://github.com/srihari-humbarwadi/tpu_index

Stuff we used: Keras / TensorFlow 2.0, TPUs, SciBERT, Hugging Face, Semantic Scholar.

Let me know if you have any questions.

Rideshare giant Uber continues to explore deep learning’s potential to provide safer and more reliable self-driving technologies. This week, the Uber Advanced Technologies Group (ATG) released Neuropod, an open-source library that provides a uniform interface for running deep learning (DL) models from multiple frameworks in C++ and Python.

Here is a quick read: Uber ATG Open-Sources Neuropod DL Inference Engine
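The core idea of such an abstraction layer is that application code talks to one interface while per-framework adapters do the actual work. The sketch below illustrates that pattern in Python with hypothetical names (`Backend`, `load_model`, and two fake backends that simply double their input); it is not Neuropod's actual API.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, List

Tensor = List[float]  # stand-in for a real framework tensor in this sketch


class Backend(ABC):
    """Hypothetical per-framework adapter behind a single inference interface."""

    @abstractmethod
    def infer(self, inputs: Dict[str, Tensor]) -> Dict[str, Tensor]:
        ...


class FakeTorchBackend(Backend):
    # A real adapter would run a TorchScript model here.
    def infer(self, inputs):
        return {"y": [2.0 * v for v in inputs["x"]]}


class FakeTensorFlowBackend(Backend):
    # A real adapter would run a TensorFlow graph here.
    def infer(self, inputs):
        return {"y": [v + v for v in inputs["x"]]}


BACKENDS: Dict[str, Callable[[], Backend]] = {
    "torchscript": FakeTorchBackend,
    "tensorflow": FakeTensorFlowBackend,
}


def load_model(framework: str) -> Backend:
    """Callers choose a framework by name but only ever see the Backend interface."""
    return BACKENDS[framework]()


for framework in ("torchscript", "tensorflow"):
    print(framework, load_model(framework).infer({"x": [1.0, 2.0]}))
```

Swapping the framework behind a model then changes only the registry entry, not the calling code.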

Implika is a JavaScript library implementing a rule-based inference engine. The only operator in its input is implication, which operates on three types of expressions: constants, schematic variables, and, recursively, implications. These elements are enough to derive all the other operators and data structures we may find useful in computation. The output of the inference engine asserts all the implicit information that can be derived from the input set of mutually related rules. One surprising thing about Implika is its code size: the whole pattern-matching and inference system is implemented in only about 150 lines of JavaScript. Nevertheless, this does not diminish Implika's ability to represent a Turing-complete system.

Implika's input language is a kind of s-expression:

s-exp := () | constant | @variable | (s-exp s-exp)

where the left s-expression implies the right s-expression in (s-exp s-exp). The only rule of inference is modus ponens.
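To make modus ponens over such expressions concrete, here is a toy forward-chaining loop in Python. It is not Implika's algorithm or code, and the representation is an assumption: constants are strings, variables are strings starting with `@`, `()` is the empty tuple, and `(A B)` is a 2-tuple read as "A implies B". Matching is one-way (only variables in a rule's antecedent get bound), and the loop assumes the rules do not generate infinitely many new expressions.

```python
def is_var(x):
    return isinstance(x, str) and x.startswith("@")


def match(pattern, fact, bindings):
    """One-way match of pattern against fact; returns extended bindings or None."""
    if is_var(pattern):
        if pattern in bindings:
            return bindings if bindings[pattern] == fact else None
        return {**bindings, pattern: fact}
    if isinstance(pattern, tuple) and isinstance(fact, tuple) and len(pattern) == len(fact):
        for p, f in zip(pattern, fact):
            bindings = match(p, f, bindings)
            if bindings is None:
                return None
        return bindings
    return bindings if pattern == fact else None


def substitute(expr, bindings):
    """Replace bound variables in expr with their values."""
    if is_var(expr):
        return bindings.get(expr, expr)
    if isinstance(expr, tuple):
        return tuple(substitute(e, bindings) for e in expr)
    return expr


def forward_chain(facts):
    """Apply modus ponens until nothing new appears: from A and (A B), derive B."""
    known = set(facts)
    while True:
        new = set()
        for rule in known:
            if isinstance(rule, tuple) and len(rule) == 2:
                antecedent, consequent = rule
                for fact in known:
                    b = match(antecedent, fact, {})
                    if b is not None:
                        new.add(substitute(consequent, b))
        new -= known
        if not new:
            return known
        known |= new


facts = {"rain", ("rain", "wet"), ("wet", "slippery")}
print(sorted(forward_chain(facts) - facts))  # ['slippery', 'wet']
rules = {("a", "b"), (("@x", "b"), ("from", "@x"))}
print(forward_chain(rules) - rules)  # {('from', 'a')}
```

The second example shows a schematic variable: the rule `((@x b) (from @x))` matches the implication `(a b)` and asserts `(from a)`.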

Read about it here: https://github.com/e-teoria/Implika

Test it in the dedicated UI here: https://e-teoria.github.io/Implika/test

I've never understood the benefits of a timing belt over a timing chain. Belts require more (costly) maintenance, and when one breaks on an interference engine (whose benefits I also don't see), you're pretty much SOL. Is there a valid reason, beyond marginal fuel-economy gains or some sort of planned obsolescence, for a manufacturer to use both?

The only alternative explanation I can see is that it's a way for dealerships to make a bit more money on routine maintenance checks, kind of like how manufacturers' oil-change intervals changed after dealers started offering lifetime scheduled maintenance.

My friend and I built a CNN inference engine in C++ with AVX as part of a competition whose objectives were:

Obviously we did not win, because we were a couple of microseconds behind the winner. However, we achieved all of the objectives and thought we would share the engine with you all to get your feedback, and so you can explore and use it.

Here is the link to the repo: https://github.com/amrmorsey/Calibre
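For readers new to the topic, the core of a CNN inference engine is the convolution loop nest. Below is a naive pure-Python reference (stride 1, no padding, cross-correlation as CNN frameworks compute it), assuming a [channels][height][width] layout; it is not Calibre's code, and the function name is hypothetical. An AVX engine would typically vectorize the inner work so that one 256-bit register processes eight float32 values at a time, and often reorders loops or lowers convolution to a matrix multiplication for cache efficiency.

```python
def conv2d_valid(image, kernels, biases):
    """Direct 2-D convolution, stride 1, no padding.
    image:   [C_in][H][W]
    kernels: [C_out][C_in][K][K]
    biases:  [C_out]
    returns: [C_out][H-K+1][W-K+1]"""
    c_in, h, w = len(image), len(image[0]), len(image[0][0])
    k = len(kernels[0][0])
    out_h, out_w = h - k + 1, w - k + 1
    out = []
    for kernel, bias in zip(kernels, biases):
        plane = [[bias] * out_w for _ in range(out_h)]
        for c in range(c_in):
            for y in range(out_h):
                for x in range(out_w):
                    # Dot product of the KxK window with the kernel slice for channel c.
                    acc = 0.0
                    for ky in range(k):
                        for kx in range(k):
                            acc += image[c][y + ky][x + kx] * kernel[c][ky][kx]
                    plane[y][x] += acc
        out.append(plane)
    return out


image = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]]]  # one 3x3 channel
kernels = [[[[1, 0], [0, 1]]]]               # one 2x2 diagonal kernel
print(conv2d_valid(image, kernels, [0.0]))   # [[[6.0, 8.0], [12.0, 14.0]]]
```

The innermost x loop is the natural candidate for SIMD: adjacent output pixels read adjacent input pixels, so eight of them can be accumulated per AVX instruction.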

Edit: Here are our benchmarking results compared with Caffe built with ATLAS and with OpenBLAS:

Note: Since the NVIDIA/Caffe branch has no Windows or Mac port, all of our comparisons against it were run on Linux. Caffe with ATLAS was also benchmarked only on Linux. Here are the results of the benchmarking:

Machines:

Intel Core i5-6200U (Linux):

Intel Core i5-2500K (Linux):

Intel Core i5-2500K (Windows 10 32-bit):

Intel Core i5-2500K (Windows 10 64-bit):

Intel Core i7 (macOS Sierra):

The human ape brain is an inference engine composed of a neural network built on neurotransmitters and neuroreceivers, electrically firing electrochemicals produced from nanofactories in the synapse, through the iron particle, salt water (blood) medium to the receptor transducer.

Stars are machines, self-assembling limited local fuel nuclear fusion reactors. Galaxies are composed of stars, another type of dynamic machine. The physical universe, composed of stars and galaxies, is a thermodynamic engine, a machine.

The human ape brain perceiving these machines is also a machine.

The answers only raise more questions.


